Earlier this week a peer-reviewed, university-backed research paper was released that essentially said that Polar power meter pedals shouldn’t be trusted, and that SRM was better:

“The power data from the Keo power pedals should be treated with some caution given the presence of mean differences between them and the SRM. Furthermore, this is exacerbated by poorer reliability than that of the SRM power meter.”

Following that, a not-so-small flotilla of you e-mailed me the paper and asked for my thoughts. There was even a long Slowtwitch thread on it, as well as a Wattage Group discussion on it.

It wasn’t until two nights ago that I got around to actually paying the $25 to read/download the study, which was published in the “International Journal of Sports Physiology and Performance”. As with most fancy organizations, to those of us outside academia the words ‘International’ and ‘Journal’ somehow translate to “must be right and smarter than I”. So much so that some cycling press even picked up the story yesterday, apparently without digging into the claims much.

Except…here’s the problem: The supposedly peer-reviewed paper was so horribly botched in data collection that the results and subsequent conclusions simply aren’t accurate.

Now, before we get into my dissection of the paper – I want to point out one quick thing: This has nothing to do with Polar or SRM, nor the accuracy of their products. I’ll sidestep that for the moment and leave that to my usual in-depth review process.

Instead, this post might serve as a good primer on why proper power meter testing is difficult. So darn difficult, in fact, that four PhDs screwed it up. What’s ironic is that they actually hosed up the easy parts. They didn’t even get far enough to hose up the hard parts.

The Appetizer: Calibration

The first question I respond with anytime someone asks me why their two power meter devices didn’t match is how they calibrated them (and how they installed them). When it comes to today’s power meters, so many are dependent on proper installation. For example: not torquing a Garmin Vector unit properly, not letting a Quarq settle for a few rides and then re-tightening it a bit, or not getting the angles right on the Polar solution. Installation absolutely makes the difference between accurate and inaccurate.

In this case, they didn’t detail their installation methods – so one has to ‘trust’ they did it right. They did, however, properly set the crank length on the Polar, as they noted, which is critical to getting proper values. They also said they followed the calibration process for the Polar prior to each ride, which is also good. Now, on the older W.I.N.D.-based Polar unit they were using, there aren’t many ways to get detailed calibration information from it – but they got those basics right.

Next, we look at the SRM side of the equation. In this case they noted the following:

“The SRM was factory calibrated 2 months before data collection and was unused until the commencement of this study. Before each trial the recommended zero-offset calibration was performed on the Powercontrol IV unit according to the manufacturer’s instructions (Schoberer Rad Meßtechnik, Fuchsend, Germany).”

Now, that’s not horrible per se, but it’s also not ideal for a scientific study. In their case, they’re saying it was received from SRM two months prior and not validated/calibrated by them within the lab. While I could understand the thinking that SRM as a company would be better placed to calibrate it, I’d have thought that at least validating the calibration would be a worthwhile endeavor for such a study. Factory calibrations from power meter companies being done incorrectly is certainly not unheard of within the industry, albeit rare. On the bright side, they did zero-offset it each time.

The Main Course: Data Collection

Next, we get into the data collection piece – and this is where things start to unravel. In their case they used the SRM PowerControl IV head unit with the SRM power meter. Why they used a decade-old head unit is somewhat questionable, but it actually doesn’t impact things here.

On the Polar side, they went equally old-school with the Polar CS600x, introduced eight years ago in 2007. Now, in the study’s case they were somewhat hamstrung here as they didn’t have many options with using the W.I.N.D. based version of Polar’s pedals (the new Bluetooth Smart version only came out about 45 days ago). Nonetheless, like the older SRM head unit choice it really didn’t matter much here. It’s not how old your sword is, but how you use it. Or…something like that.

Now, when it comes to power meter analysis data, it’s critical to ensure your data sampling rate is both equal and high (The First Rule of Power Meter Fight Club is…sampling rates). Generally speaking you use a 1-second sampling rate (abbreviated as 1s), as that’s the common denominator rate that every head unit on the market can use. It’s also the rate that every piece of software on the market easily understands. And finally, it ensures that for 99% of scenarios out there (excluding track starts), you aren’t losing valuable data.

Note that both of these requirements have nothing to do with the power meters themselves, only with the head units.

Here’s how they configured the SRM head unit to collect data:

“Both protocols were performed in a laboratory (mean ± SD temperature of 21.8°C ± 0.9°C) on an SRM ergometer, which used a 20-strain-gauge 172.5-mm crank-set power meter (Schoberer Rad Meßtechnik, Fuchsend, Germany) and recorded data at a frequency of 500 Hz.”

Now it’s a bit tricky to understand what they mean here. If they were truly doing 500 Hz, that’d be 500 times a second. Except the standard PowerControl unit doesn’t support that; only a separate torque analysis mode goes that direction, and as best I can tell it maxes out at 200 Hz. What would have been more common, and what I believe they actually did, is 0.5s on the head unit. This means the head unit records two power meter samples per second. That by itself is not horrible; it just makes their lives a bit more complicated, as SRM is the only head unit that can record at sub-second rates. So you have to do a bit more math after the fact to make things ‘equal’. No worries though, they’re smart people.
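For the curious, the "bit more math" to line 0.5s SRM data up against 1s data from another head unit can be as simple as averaging each pair of half-second readings. Here's a minimal Python sketch of that, assuming the readings are already a plain list of watts that starts on a whole-second boundary. The function name is hypothetical, and no real SRM file format is parsed here.

```python
from statistics import fmean


def downsample_to_1s(samples_half_second):
    """Average each consecutive pair of 0.5 s readings into one 1 s value.

    Assumes the list starts on a whole-second boundary. A trailing
    unpaired reading is dropped rather than guessed at.
    """
    pairs = len(samples_half_second) // 2
    return [
        fmean(samples_half_second[2 * i : 2 * i + 2])
        for i in range(pairs)
    ]


# Hypothetical 0.5 s power readings in watts (illustrative only).
srm_half_second = [200, 210, 250, 260, 300, 310, 305]
print(downsample_to_1s(srm_half_second))  # [205.0, 255.0, 305.0]
```

Averaging (rather than keeping every other reading) preserves the energy in both half-second samples, which is the whole point of recording at the higher rate in the first place.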

What did they set the Polar to?

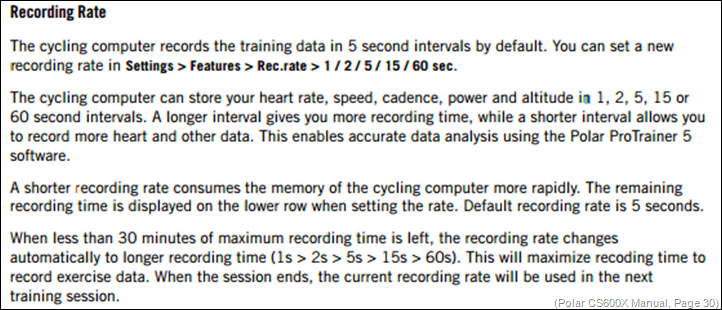

“Power measured by the pedal system was recorded using a Polar CS600X cycle computer (Polar Electro Oy, Kempele, Finland), which provided mean data for each 5 seconds of the protocols.”

Hmm, that’s strange. They set the Polar to 5s, or every 5 seconds. That means that it’s not averaging the last 5 seconds, but rather taking whatever value occurs every 5 seconds, ignoring whatever happened during the other 4 seconds.
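To see why that distinction matters, here's a small Python sketch comparing the two behaviours on the same hypothetical 1s trace: keeping one reading per 5-second tick (what the CS600x's 5s mode does, per the above) versus averaging each 5-second block (what the paper assumed). Which second within each block a real recorder actually keeps is an assumption here; the sketch simply keeps the first one.

```python
from statistics import fmean

# Hypothetical 1 s power trace (watts) covering 10 seconds, with a short surge.
power_1s = [150, 160, 400, 420, 170, 155, 165, 158, 162, 160]


def point_sample(trace, interval):
    """Keep one reading per `interval` seconds and drop everything in between.

    Assumes the recorder keeps the first second of each block; a real
    device may land on a different second.
    """
    return trace[::interval]


def block_mean(trace, interval):
    """Average each complete `interval`-second block (what the paper assumed)."""
    return [
        fmean(trace[i:i + interval])
        for i in range(0, len(trace) - interval + 1, interval)
    ]


print(point_sample(power_1s, 5))  # [150, 155]
print(block_mean(power_1s, 5))    # [260.0, 160.0]
```

The 400+ W surge shows up in the block average but vanishes completely from the point-sampled record.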

Of course what’s odd here is that the CS600x, for all its faults, is actually perfectly capable of recording at the standard 1s frequency that the rest of the world uses for power meter analysis. The manual covers this quite well on page 30, so it’s certainly not hidden (it can also be set to 2s, 5s, 15s, or 60s). Now, oddly enough, they do seem to understand the implications of the situation, as later in the study they note:

“…This was due to the low sampling frequency and data averaging of the cycling computer that accompanies the Keo pedal system. This was not sensitive enough to allow accurate determinations of sprint peak power.”

So…why didn’t they simply change the frequency? Perhaps because 5s is actually the default. Or, perhaps because they didn’t even realize you could change it. Either way, I don’t know.

But what I do know is that it would turn out to be the fundamental unraveling of their entire study.

(Note: There are some technical recording aspects that can make the situation above even worse, such as how each power meter transmits its power value based on the number of strokes per second, which varies by model/company. But to keep this somewhat simple, I’ll table that for now. Plus, it just compounds their errors anyway.)

Dessert: A sprinting result

So why does recording rate matter? Well, think of it like taking photographs of a 100m dash on a track, and then using only those photographs to give people a play-by-play of the race afterwards. That’d be pretty easy if you took a photo every second, right? But what if you only took photos every 5 seconds?

In any world-class men’s 100m race, that’d result in only one photo at the start, one in the middle, and one just missing the finish tape breaking (since most are run in slightly under 10 seconds).

That’s actually exactly what happened in their testing. See, their overall test protocol had two pieces. The first was a relatively straightforward 25 W increment step test. In this test they incremented the power by 25 W every two minutes until exhaustion. This is a perfectly valid way to start approaching power meter comparisons – and a method I often use indoors on a trainer. It’s the easiest bar to pass since environmental conditions (road/terrain/weather) remain static. It’s also super easy to analyze. In this test section, they note that the SRM and Polar actually matched quite nicely:

“There was also no difference in the overall mean power data measured by the 2 power meters during the incremental protocol.”

That makes sense: with two-minute blocks and using only mean/median data (more on that later), they’d see agreement pretty easily. After all, the power was controlled by a trainer, so there would be virtually no fluctuations.

Next came the second test, which had riders do 10-second maximal sprints, each followed by 3 minutes of easy pedaling. This is where they saw a difference:

“However, the Keo system did produce lower overall mean power values for both the sprint (median difference = 35.4 W; z= –2.9; 95% CI = –58.6, 92.0; P= .003) and combined protocols (mean difference = 9.7 W; t= –3.5; 95% CI = 4.2, 15.1; P= .001) compared with that of the SRM.”

Now would be an appropriate time to remember my track photo analogy. Remember that in this case the SRM head unit was configured to record every half second, so during those 10 seconds it took 20 samples. Whereas they had the Polar computer recording every 5 seconds, so it took 2 samples. It doesn’t take a PhD to understand how this would go horribly wrong. Of course the results would be different. You’re comparing two apples to twenty coconuts; it’s not even close to a valid testing methodology.

They simply don’t have enough data from the Polar to establish any relationship between them. In the finish line analogy, we don’t even have a photo at the finish – they completely missed that moment in time due to sampling. Who’s the winner? We’ll never know.
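The effect can be simulated without any real hardware. The Python sketch below assumes a made-up sprint whose true power fades linearly from 1200 W by 60 W per second over 10 seconds, and lets both "meters" read that true signal perfectly. The only difference is how often each one is recorded: every 0.5s (20 readings) versus every 5s (2 readings), with the 5s ticks landing either at 0s/5s or at 4s/9s. The names `srm_like`, `polar_early` and `polar_late` are hypothetical labels, not data from the paper.

```python
from statistics import fmean


def true_power(t_ms):
    """Hypothetical sprint: 1200 W at the start, fading 60 W every second.

    Only meant for 0 <= t_ms < 10_000 (the 10 s sprint). Illustrative only,
    not data from the paper.
    """
    return 1200 - 60 * t_ms / 1000


def record(start_ms, end_ms, step_ms):
    """Perfect readings of the true signal taken every `step_ms` milliseconds."""
    return [true_power(t) for t in range(start_ms, end_ms, step_ms)]


SPRINT_MS = 10_000
srm_like = record(0, SPRINT_MS, 500)          # 0.5 s recording: 20 readings
polar_early = record(0, SPRINT_MS, 5_000)     # 5 s ticks at 0 s and 5 s
polar_late = record(4_000, SPRINT_MS, 5_000)  # 5 s ticks at 4 s and 9 s

print(len(srm_like), len(polar_early))        # 20 2
print(fmean(srm_like))                        # 915.0
print(fmean(polar_early), fmean(polar_late))  # 1050.0 810.0
```

Both 5s series are perfect readings, yet their sprint means land 135 W above or 105 W below the 0.5s mean purely because of when the ticks happened to fall.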

(Speaking of which, in general, I don’t like comparing only two power meters concurrently. It doesn’t really tell you which is right or wrong, only that one of them is different. To at least guesstimate which is incorrect, you really need some other reference device.)
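A minimal sketch of the "other reference device" idea, assuming three hypothetical meters whose 1s readings are already time-aligned: score each device by its average per-second disagreement with the others. With only two devices the scores are identical by construction, which is exactly why a two-meter comparison can't say who is wrong. The device names and readings are made up for illustration.

```python
from itertools import combinations
from statistics import fmean


def pairwise_mean_abs_diff(streams):
    """Mean absolute 1 s difference for every pair of devices.

    `streams` maps a device name to a list of 1 s power values (watts) that
    are assumed to be time-aligned already; no alignment is done here.
    """
    return {
        (a, b): fmean(abs(x - y) for x, y in zip(streams[a], streams[b]))
        for a, b in combinations(sorted(streams), 2)
    }


def disagreement_scores(streams):
    """Average disagreement of each device against every other device."""
    diffs = pairwise_mean_abs_diff(streams)
    return {
        name: fmean(d for pair, d in diffs.items() if name in pair)
        for name in streams
    }


# Hypothetical, time-aligned 1 s readings in watts.
three = {
    "meter_a": [250, 300, 350],
    "meter_b": [252, 298, 352],
    "meter_c": [240, 285, 330],
}
scores = disagreement_scores(three)
print(max(scores, key=scores.get))  # meter_c disagrees with both others

# With only two devices both scores are identical: nothing singles one out.
two = {"meter_a": [250, 300, 350], "meter_c": [240, 285, 330]}
print(disagreement_scores(two))  # {'meter_a': 15.0, 'meter_c': 15.0}
```

Even then, two devices agreeing only makes the third one suspect; it doesn't prove the pair correct.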

Tea & Coffee: Analysis

Because I live in Europe now, a tea or coffee course is mandatory for any proper meal. And for that, we turn to some of their analysis. Now at this point the horse is dead – as their underlying data is fundamentally flawed. But still, since we’re here – let’s talk about analysis.

In their case, throughout the study they only used ‘Mean’ and ‘Median’ values for comparisons. Now, for those who have long forgotten their mathematics classes, ‘Mean’ is simply ‘Average’: take the sum of all values and divide it by the number of values. Whereas ‘Median’ is the middle value of all the data points collected, once they’re sorted.
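For anyone who wants to see the difference, here's a tiny Python example using a hypothetical set of 1s readings with one brief surge:

```python
from statistics import fmean, median

# Hypothetical 1 s readings (watts) from one steady block, with one brief surge.
readings = [250, 255, 248, 252, 900]

print(fmean(readings))   # 381.0 (sum / count, pulled up by the surge)
print(median(readings))  # 252 (middle value once sorted, ignores the surge)
```

Note that both reduce the whole interval to one number, which is why neither says much about when or how two meters differ.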

The problem with both approaches is that when it comes to power meter data analysis, it’s actually the easiest bar to pass. You’ll remember, for example, my look at the $99 PowerCal heart-rate strap based ‘power meter’. Using the averaging approach, it does quite well: when you average longer periods like 2-minute blocks at a steady-state value (as they did in the incremental test), it’s super-easy to get nearly identical results (as they did in the study). With short sprints, though, it’s much harder to get matching results using such a method, due to slight recording differences and protocol delay.
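Here's a rough Python illustration of why steady 2-minute blocks are such an easy bar. It assumes (hypothetically) that one meter reports everything exactly 1 second late compared to another but is otherwise perfect; the traces and the `delayed` helper are made up for illustration.

```python
from statistics import fmean


def delayed(trace, seconds):
    """Shift a 1 s trace later by `seconds`, padding the start with its first value.

    Stands in for a meter or head unit that reports a little late
    (hypothetical). Assumes 0 <= seconds < len(trace).
    """
    if seconds <= 0:
        return list(trace)
    return [trace[0]] * seconds + trace[:-seconds]


def block_means(trace, block):
    """Mean of each consecutive `block`-second chunk."""
    return [fmean(trace[i:i + block]) for i in range(0, len(trace), block)]


# Hypothetical step test: 2-minute blocks at 200 W, then 225 W.
steps = [200] * 120 + [225] * 120
print([round(m, 1) for m in block_means(steps, 120)])              # [200.0, 225.0]
print([round(m, 1) for m in block_means(delayed(steps, 1), 120)])  # [200.0, 224.8]

# Hypothetical sprint: 5 s easy, 10 s at 1000 W, 5 s easy.
sprint = [100] * 5 + [1000] * 10 + [100] * 5
late = delayed(sprint, 1)
print(fmean(sprint[5:15]), fmean(late[5:15]))         # 1000.0 910.0
print(max(abs(a - b) for a, b in zip(sprint, late)))  # 900
```

Over the step test the delay shifts one block mean by about 0.2 W, while over the 10-second sprint window it costs 90 W, with 900 W gaps at the individual seconds where the effort starts and stops.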

Instead, when comparing power meters you really want to look at changes over different time periods and how the power meters differ. You want to understand things like how and when they are different, as well as why they might be different. For example, with their 5s recording approach, they wouldn’t have been able to properly assess a 10-second sprint, nor the variations that would have occurred at peak power output.
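One common way to look at "how and when" is best average power over several window lengths. The Python sketch below uses a hypothetical 1s trace around a sprint (made-up numbers) and also shows what a recorder keeping one reading every 5 seconds would have of it.

```python
from statistics import fmean


def best_average(trace_1s, seconds):
    """Highest average power over any `seconds`-long window of a 1 s trace."""
    return max(
        fmean(trace_1s[i:i + seconds])
        for i in range(len(trace_1s) - seconds + 1)
    )


# Hypothetical 1 s trace (watts) around a roughly 10 s sprint.
trace = [120, 130, 600, 1100, 1250, 1180, 1050, 950, 880, 820, 760, 700, 150, 130]

# Prints "1 1250.0", then "5 1106.0", then "10 929.0".
for window in (1, 5, 10):
    print(window, best_average(trace, window))

# What a recorder keeping one reading every 5 s would have of the same effort.
print(trace[::5])  # [120, 1180, 760]
```

The 1s peak of 1250 W never appears in the 5s readings, so no amount of analysis afterwards can recover it. Comparing two meters' best averages at each window length shows where they start to diverge.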

Which ultimately takes us to the most important point: You have to understand the technology in order to study it.

I’m all for people doing power meter tests, I think it’s great. But in order to do that you have to understand how the underlying technology works. That means that you have to understand not just the scientific aspects of the outputted data, but also how the data was collected and all of the devices in the chain of custody that make up the study. In this case, there was a knowledge gap at the head unit, which invalidated their test results (a fact that I don’t think even SRM would dispute).

Lastly, it’s really important to point out that the vast majority of accuracy issues you see in power meters produced these days aren’t easily caught indoors. Too many studies are focusing on testing units there, when in fact they need to go outdoors in real conditions. They need to factor in aspects like weather (temperature changes and precipitation), as well as road surface conditions (cobbles/rough roads). These two factors are the key drivers today in accuracy issues. Well, that and installation.

I’ve had a post on how I do power meter testing sitting in my drafts bin now for perhaps a year or so, perhaps I’ll dust it off after CES and publish it. And at the same time, I’ve also been approached by a few companies on getting together and establishing some common industry standards on how testing should be done – for old and new players alike. Maybe I’ll even get really all New Year productive and take that on too.

In the meantime, thanks for reading!


At times like this I really wish I could say something intelligent. Anyway, this really is a worthwhile post which highlights why people should always use multiple sources when researching products and how good they actually are. My first port of call would always be your site. Keep up the good work; not sure how you always find the time.

Happy New Year.

500 Hertz is five hundred samples per second, Ray, so the SRM is picking up 5000 samples in 10 seconds. Doesn’t alter your conclusions about the sprint at all, obviously, but you might want to change that.

Replying to my own comment – is that the internal sampling rate of the meter, as distinct from the rate the SRM head is receiving from it?

Funny, I didn’t catch their written error either. SRM head units don’t have any recording rate close to 500 samples per second in standard mode. As best I know, they can max out at 200 Hz in Torque Analysis mode, but it doesn’t actually sound like they were using that. I just updated that little section to help clarify that a bit for folks.

That’s all a bit ambiguous, but, as you noted, doesn’t much matter with respect to the issue on the Polar side.

As to your own question, yes, each power meter has a different method for how it samples internally versus how it transmits. Some work per crank rotation, averaging the power of each rotation and transmitting it then, while others work on a 1-second basis and transmit once per second.

Was there also an error in their description of the Polar testing methodology? They say the Polar was providing the mean for each 5 seconds of the protocol, but you state it’s just measuring every 5th second and not averaging. Again… it doesn’t make any difference in the final result, but the engineer in me craves accuracy.

Correct, in 5s recording mode it’s just sampling once every 5s – ignoring whatever data occurred in between that. Very similar to how ‘Smart Recording’ works on a Garmin, it’s just waking up and recording whatever happened in that one second of time.

And yup, also correct that they assumed it would be averaging it over 5 seconds.

I thought Smart recording on the Garmin just impacted the recording rate, not the data computation. Like I am pretty sure that distance is being computed constantly (many times per second) and doesn’t necessarily just equal the straight line distance between the two “recorded” data points. Isn’t this how it knows when to record each data point? Since if you are going straight vs lots of turns, it will dynamically change the recording frequency. Another way to put it, it’s computing all the data points, just throwing away the ones that don’t add a lot of value.

Or are you referring to the extended recording of the Fenix and 920 where it shuts down the GPS to extend battery life?

Smart Recording: Distance data yes, but not sensor data. For that it’s point in time.

UltraTrac: For Fenix and FR920XT is point in time for distance and sensor.

Without reading the article, it sounds as if they might have been using one of these and collecting reference data at 500 Hz.

link to srm.de

Matt,

I think you are correct. They specifically mention ergometer: “Each participant was required to complete 2 laboratory cycling trials on an SRM ergometer (SRM, Germany) that was also fitted with the Keo power pedals (Look, France).”

That could explain the older SRM head unit (it comes with the unit from SRM and is just needed for display while the attached equipment records data at 500 Hz). It makes sense for a university to get a new ergometer for their Human Performance/Sport Activity Lab, with this study being their first use of the machine (“unused until the commencement of this study”).

Just a WAG, but I was a Human Performance Lab research assistant while in college and can see that scenario.

Ray,

If you want to try to validate their experiment I’ll gladly donate my slightly used CS600x if you can get SRM to donate a new Ergometer to the DC Rainmaker Institute for the Study of Power Meters. LoL

SteveT

Sounds like they meant 500ms.

So basically they didn’t read the manual properly for the Polar CS600x. They thought it records average power over a given period, when in fact it records power at a given instant. And this paper made it through peer review.

Do you honestly expect peer reviewers to know the intricacies of every single activity monitor? They can’t. They are more focused on evaluating the technical methods they are skilled in. They can’t be motivated to do the same level of research (actually more, in this case!) as the researcher who submitted the work!

This is exactly why peer-review is flawed. Crowd-sourced peer review has much more potential if controlled appropriately.

I for one would welcome standardized testing for PMs. Until that happens we are at the mercy of marketing claims of “1% scientifically tested accuracy” when in real-life environmental conditions it ain’t even close!

This is very interesting. I’m interested in why they chose to compare power meters when it is readily acknowledged that readings from different devices will be slightly different although true to themselves. It is also interesting that they used “mean and median” averages when perhaps a more appropriate solution would be to test for statistical significance against the 1-2% accuracy claims made by most power meter companies.

This seems like a completely wrong approach to collecting data in the first place. There should be no head unit involved whatsoever; instead they need to be recording the raw data that is sent from the meters, either over ANT+ or BLE. Of course the problem here is Polar’s proprietary W.I.N.D. nonsense.

This would allow reliable analysis of the meters instead of analyzing *whatever the head unit did to the meter data*.

Generally, if N<1000, I tend to ignore the results; the N=2 for the 10-second testing here is just silly. They must have known that; I was told that in maths class when I was 11 years old.

The thing that bothered me the most about the summary that was available for free was this line.

“Furthermore, this is exacerbated by poorer reliability than that of the SRM power meter.”

Did they have any reason to actually say that the Polar had lower reliability?

Well done. I saw the posts about the test, but didn’t buy the paper. One thing jumped out at me though: did they have only one sample of the pedals and the SRM? That seems problematic for a scientific test that claims a general result. I am by no means a scientist, but it seems problematic if that is the case.

Ps. Keo power owner – and not unhappy with them, but haven’t tried other system.

Pps. The new PC8 has been rumored to have BTLE. Wonder if this would work with the keo power pedals? Just in case polar v650 ends as vapourware.

On the PC8, I can’t quite keep track whether or not they plan to support BLE sensors. It’s gone back and forth a few times.

Firstly a caveat – my science education is in biology, not specifically human physiology or sports science. But with that stated… what I can’t see in any of this commentary is whether the study was conducted double-blind, does anyone know? Ideally you’d want one team setting up all the equipment properly in a way that the people cycling had no idea which kit they were using, and potentially even blind it in other ways just to check no underlying bias was creeping in (e.g. separate out the setup and analysis work too). Also, in terms of sample size, did they just use one example of each meter / head unit, or did they use a selection? Because if it was just one of each, that feels like a very dubious analysis – you can’t really comment on the accuracy beyond those immediate units used.

It was just one unit of each, with the researchers treating the SRM ergometer (note that this is not just an SRM powermeter, see here: link to srm.de) as a reference and comparing the pedals to that reference.

Their problem with the reference is trusting the factory calibration without more detail, but having just one reference in itself is not necessarily a bad thing (think of a 20 gram mass that’s certified to 0.01g and used/stored properly). Having just one set of pedals is definitely not ideal.

You don’t need double-blind anything for what they’re trying to do. Just think of it as using 2 powermeters at once, all of the time.

Using 5 second sampling on the head unit for 10 second sprints and claiming poor accuracy is stupid beyond belief. Papers like this make me question why I didn’t use my smarts in fields other than physics!

Wow. The journal’s table of contents for this month is full of articles that are in the same direction (sports measurement techniques), so I’m baffled how the sample frequency mismatch got through the reviewers. Normally I’d also call out the co-authors, but I’ve (co-)written enough articles to know that by draft 87 nobody is re-reading it anymore. (Heck, by draft 8 co-authors are just making sure their names are still spelled correctly!)

2nd: At this point I’d think you’d be invited to be on the editorial board of a journal like this. Seriously, granting their expertise on the physiology side of things, it’s tough to expect them to also be experts in the (rapidly evolving) sports technology market.

Finally, they could have spared themselves a LOT of embarrassment if they had reached out to either PM company first. Or, heck, the lead author is on Strava, and I’ll take a leap of faith and suspect he also has looked at dcrainmaker.com. He could have simply asked you to look it over before he published it.

Ray, I hope you will consider submitting your critique to the journal as a “letter to the editor”. This is a common practice in the scientific community and is a critical part of the peer review process, especially when studies’ conclusions or methods are disputed by outside experts ( such as you).

I second this.

Third.

The issue here isn’t with “peer review” per se, it is with a lack of understanding in the field. In all likelihood the reviewers blew through the methods section and didn’t catch the different recording rates (or if they caught them didn’t know the implication). A standard method (like you say you’re thinking of describing) should go a long way to solving this.

I came here to make the same suggestion. You should definitely write a letter to the editor. Good insights that can help improve the science in this area. Quite frankly, I seriously doubt anybody in a research institution lives and breathes this stuff like you.

The problem, Ray, is that the peer group were probably exercise physiologists and statisticians, not electronics experts. So although the researchers actually blew the project in their data setup, the peer group was unlikely to catch this highly specialized piece of information that the whole thing hinges on. Likely not one reviewer had ever used the Polar pedals. Also, in journals it is not unusual for the next issue to carry a counterpoint to a controversial study, or, when the researchers and journal note that they got it wrong, to post a correction or reassessment. It is the old story: just because it got published does not make it fact until it is reproduced multiple times. So though the study touches on an issue that is important for journals (ensuring that a measurement tool works before using it), they felt they were doing the research community a service by warning it to be wary of a certain tool and to ensure it is used properly, so that work from different studies using different tools can be compared. So what they might be saying (having not read the study in the detail you have) is: beware? Sloppy research happens all the time, and sometimes it gets through peer review due to a lack of specific knowledge. That does not detract from other studies, but it is always important to read with a good dose of scepticism. I have many times read a media source’s explanation, only to get the paper and find out that their conclusion was not what the paper or the researcher had said or felt. So we need the sort of review you have done, and hopefully you will send it to the main researcher so they can see their errors; they are easy to contact through the email listed on the paper for the principal researcher. Just one final point: academic research is rarely conducted outdoors due to the many factors that are not controllable. For that reason the study was conducted indoors, as would be the research projects in which the power meter would likely be used.
In that case they are not wrong, they are just doing a different study than what you do: research usage vs everyday usage. As one of my professors used to say, good preparation and a good question is halfway to a good answer.

Having done multidisciplinary research, I know full well, as these researchers should have known too, that you tap someone with “electronics” expertise to help. Certainly the researchers could do with a little education on measurement and sampling theory. Comparing data collected at different sampling rates is an embarrassing mistake.

Having been a full-time R&D engineer for a decade, I’d say one cornerstone of a study is that the outcome must be repeatable by anyone else following the same testing protocol. Interestingly, I’ve seen studies executed with multiple scenarios (as in variations in parameters), each giving consistent results for its given setup. Yet selectively picking setup comparisons for publication can easily sway the apparent results. It would be interesting to know who sponsored the study, and separately the source trail for funding.

I want to assume positive intent, yet even as a person who can do the math, I’m rather indolent when there is a choice to collect data at the same rate simply because it is rather painful to have reviews like this pick my results apart.

Nice job Ray

I’d hate to have to prove/defend my thesis if you were on the review board. Well done, this should have never made publication!

What are SRM/Polar/”International Journal of Sports Physiology and Performance” saying about this debacle?

“You have to understand the technology in order to study it.” And that’s it.

Amazing job. Thanks very much for your insights.

Keep up the excellent work.

Numbers are great but you sure can use them to tell a lie.

Well… I could write a long article about this post and the paper as a whole, as I actually peer reviewed it and reproduced the same results as the article in some of the tests in my own cellar, so in that sense it was a correct article with correct data.

Sampling rate was a problem, as was the lack of a specific standardization of power meter testing and setup. All this was discussed before publishing.

The power meters were not compared side by side since the collection of the data was different. It was not an article about which power meter to buy or which would be better for the consumer at all. I think you are jumping the gun and drawing conclusions too fast. The authors had no intention to say this or that power meter would be better.

For a comparison, the test would have used the same data on the same head unit, with a known power output in a sterile environment on a completely non-human test rig.

This was not the case, so a comparison between Polar and SRM based on the article alone would be ridiculous. The authors of the article would agree 100%.

Eric

The paper abstract notes this: “Purpose: This study aimed to determine the validity and reliability of the Keo power pedals during several laboratory cycling tasks.” So it does seem that the results of the paper were intended to make some value judgement of the Keo power pedals — and I think you are incorrect to say that “The authors had no intention to say this or that power meter would be better” since they explicitly say that is what they are doing. They are attempting to show the validity / reliability, and that can only be done with respect to some reference (in this case, another power meter).

I’d be interested to know what your review process consisted of: did you simply repeat their (evidently flawed and meaningless) experiment, or did you actually analyze their methods and compare with other similar work that has been published (such as the extensive analyses available on this site)? I don’t doubt that the results are repeatable; it’s whether those results are useful in any way for achieving the stated purpose.

I hope that Ray finds the time to submit his analysis to the journal; it may be that there are other factors involved which only the authors will be able to clarify.

Thanks for responding Eric (#1).

To echo Eric #2’s point, I’m not clear what the point of the study was then, since the summary abstract clearly defines a ‘winner’ and a ‘loser’ based on the methodology they selected. And then clearly says one product isn’t capable of being accurate.

I’m also unclear what you mean when you say “the power meters were not compared side by side since the collection of the data was different”. How else would one compare them, then? I (and many others) do that for every product on the market just fine, even dealing with converting data from non-ideal scenarios like the CS600x.

Ultimately, a large portion of my concern would have been alleviated if someone had just read the manual and set both products to 1s. That would still have required some complex data analysis afterwards, and I would still have said that the structure of the test wasn’t relevant to people who ride outside, but at least it would have been usable data to start the indoor analysis from.

Time spent might be more valuable publishing a proposed standardized method for testing power meters per category in lieu of challenging a single set of results out of a journal. At least the outcome benefits everyone in the long run, including the researchers cited.

If nothing else, the article provoked thoughtful dialogue.

Thanks for another interesting article.

Does the paper mention who the intended audience is or who provided the funding for the study or why the study was performed? The “Purpose” on the main web site seems a bit vague or even misleading as I think the study could have been done without comparing it to another product.

Since the study was to measure a measuring tool, they would have done themselves a favor on the credibility side by using the same recording device for both power meters… I understand each power meter’s head unit has features and functions specific to that meter, but then you get into the problem of which part of the measuring chain actually has the deviations, and how those deviations would be normalized. In general, it does not seem that they understood their measuring tool (the head unit). In my opinion, that flaw calls the paper into question.

As long as each device is consistent for a given condition, and that consistency is understood (and the stdev of the population is reasonable), that matters more than whether product A shows exactly the same results as product B. I wonder if the article provided any statistical analysis of data quality (stdev, modality, confidence factor…)?

At least from my experience using power meters primarily on mountain bikes: my SRM remains rock solid; my Stages are definitely good enough (once I put tape over the battery door to keep water out); the Quarq did not like water splashes and did not auto-calibrate very well with altitude and temperature changes (I live, work, race, and train in the Colorado Rocky Mountains); and the Powertap had the most variance in numbers but was also good enough. But the machinery turning the power meters (me) tends to have a high amount of variance… ha!
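One common way to report the kind of data-quality statistics asked about above is a Bland-Altman style agreement analysis on paired readings: the mean difference (bias), the standard deviation of the differences, and 95% limits of agreement. This is a minimal sketch, assuming paired samples that are already time-aligned; the helper name `agreement` and the readings are hypothetical, not taken from the paper.

```python
import statistics


def agreement(device, reference):
    """Bias, SD of differences and 95% limits of agreement for paired readings.

    Assumes both lists are time-aligned and the same length (hypothetical data).
    """
    diffs = [d - r for d, r in zip(device, reference)]
    bias = statistics.mean(diffs)  # average device-minus-reference difference
    sd = statistics.stdev(diffs)   # sample standard deviation of the differences
    return bias, sd, (bias - 1.96 * sd, bias + 1.96 * sd)


# Hypothetical paired 1 s readings (W) from a test device and a reference meter.
device_w = [250, 300, 410]
reference_w = [255, 310, 420]
bias, sd, limits = agreement(device_w, reference_w)
print(f"bias {bias:.1f} W, SD {sd:.1f} W, 95% LoA {limits[0]:.1f} to {limits[1]:.1f} W")
```

A narrow spread of differences with a consistent bias is the "consistent and understood" case described above; a wide spread is the harder problem.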

Hey Ray,

Most journals, including the “International Journal of Sports Physiology and Performance”, have a “Letters to the Editor” article format that allows one to raise these kinds of concerns (see: link to journals.humankinetics.com). They are usually short and to the point; I’d suggest you submit one to correct the record.

Vinny

However, the Keo system did produce lower overall mean power values for both the sprint (median difference = 35.4 W; z= –2.9; 95% CI = –58.6, 92.0; P= .003) and combined protocols (mean difference = 9.7 W; t= –3.5; 95% CI = 4.2, 15.1; P= .001) compared with that of the SRM.

According to the cited paragraph, for the sprint experiment the median difference is 35.4 W, but the 95% confidence interval runs from minus 58.6 W to plus 92.0 W. In a different notation, that interval is 16.7 W ± 75.3 W (centered on the 16.7 W sprint mean difference reported in the Discussion, not on the 35.4 W median), and either way it includes zero. The conclusion “Keo system did produce lower overall mean power value” is clearly wrong here, because the confidence interval tells us “we can’t tell whether the Keo system produces lower or higher overall mean power”.

I think the statistics (if computed correctly) really show a lack of samples in the sprint test; the confidence interval seems huge to me compared with the measured value. In my opinion, the conclusion is incorrect for the sprint. Does the paper mention a standard deviation for the samples?

Disclaimer: I didn’t read the paper, so I’m only guessing the meaning of the numbers :-)
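The interval arithmetic in this comment can be checked with a few lines of Python. This is a minimal sketch: `ci_summary` is a hypothetical helper, and the two intervals are the ones quoted from the paper above. A 95% interval that contains zero means the data cannot rule out a zero (or opposite-sign) difference at that level.

```python
def ci_summary(lower, upper):
    """Midpoint, half-width and whether a confidence interval contains zero."""
    midpoint = (lower + upper) / 2
    half_width = (upper - lower) / 2
    contains_zero = lower <= 0 <= upper
    return midpoint, half_width, contains_zero


# 95% CIs as quoted from the paper (W).
for name, (lo, hi) in {"sprint": (-58.6, 92.0), "combined": (4.2, 15.1)}.items():
    mid, half, zero = ci_summary(lo, hi)
    print(f"{name}: {mid:.1f} +/- {half:.1f} W, contains zero: {zero}")
```

The sprint interval straddles zero while the combined-protocol interval does not, which is the distinction the comment is drawing.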

Ray,

Have you sent this article to the journal? They might (should) publish it in the next issue.

I wonder who peer reviewed this and let it pass… I hope that you let the editor know; it discredits the journal so badly!

Anne,

I’m with you. I do look to the Human Kinetics publications to keep up on the latest in training and exercise physiology, so it really raised my eyebrows to see them let this get by. They have (or can access) the expertise needed to keep the scientific rigor bar high.

That’s also why I come here too. It’s gotta work in the real world too!

SteveT

You made a mistake in your analysis, Ray. This sentence, which seems to be the essence of your criticism, is simply not true: “That means that it’s not averaging the last 5 seconds, but rather taking whatever value occurs every 5 seconds, ignoring whatever happened during the other 4 seconds.”

They did take the average. The word “mean” below means average. They took the average over 5 seconds, not final value, as you quoted yourself: “Power measured by the pedal system was recorded using a Polar CS600X cycle computer (Polar Electro Oy, Kempele, Finland), which provided mean data for each 5 seconds of the protocols.”

I hope you will correct your article above.

As Graham said… Also, Ray, there is simply no way your interpretation of the recording interval as a snapshot in time could be correct anyway, since setting the recording interval to, say, 60 secs for power, cadence and speed would then be totally useless. Just think about it :)

If they compared sprint power for every 5-sec interval based on the Polar recordings, that would also be correct, although, as they noted, at a low resolution.

Keeping the 5-sec recording interval also makes sense when you check the max recording time for the device: at 1 sec it is just below 4 hrs with power, cadence, speed and GPS.

Lastly, if there is already a diff in a lab then there is no need to do more testing in the field. No? :)

So not as bad as it looks at first… but I have not read the paper.

Graham – You’re making the same mistake they did. They said “which provided mean data for each 5 seconds of the protocols.” – but, it (the CS600x) fundamentally doesn’t do that. The product doesn’t work that way. It doesn’t average, it’s just a single point in time, every 5th second. Thus, no mean, no average, no median. Just one data point that’s not averaged over five seconds.
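The two readings of "5 second" data disputed here can be sketched in Python. Which behavior the CS600x actually uses is the point under debate in this thread; the code only shows how far apart the two can be. The power trace and the helper names `snapshot_every` and `block_mean` are hypothetical.

```python
def snapshot_every(samples, n):
    """Point-in-time recording: keep only the last sample of each n-sample block."""
    return [samples[i] for i in range(n - 1, len(samples), n)]


def block_mean(samples, n):
    """Interval averaging: mean of each complete n-sample block."""
    return [sum(samples[i:i + n]) / n for i in range(0, len(samples) - n + 1, n)]


# Hypothetical 1 Hz power trace with a short sprint in the middle (W).
power_1hz = [200] * 5 + [900, 1100, 1000, 950, 300] + [200] * 5
print(snapshot_every(power_1hz, 5))  # [200, 300, 200]
print(block_mean(power_1hz, 5))      # [200.0, 850.0, 200.0]
```

With snapshots the sprint block is recorded as 300 W; with averaging it is 850 W.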

WG – I’m not sure I follow what you’re saying as I think you’re basing your thought on Graham’s incorrect thought. But, no, keeping 5s recording interval never makes sense for power meter data analysis.

Ray, have you checked this with Polar?

The device allows a 60-sec recording interval as well. Such a long snapshot-based recording interval does not make any sense for power whatsoever. None. The recorded power would have been totally random, and it would be on Polar for allowing this nonsense, so it’s not possible IMHO.

How is that on Polar as opposed to the authors? Isn’t it their duty to understand the technology they are using for their measurements? How is their lack of knowledge of the instrument, and assumptions about how they work, not their own fault? Plus the fact that Polar CS600 head units don’t average isn’t new and has been discussed on cycling forums before. Plus if they just lay their every half-second SRM ergometer data down next to their Polar data, they could probably see what was going on. Plus, none of this explains why they didn’t just change it to 1s even if they thought 5s was averaging.

Ray’s summary of the CS600x simply capturing power every 5th second, and not the average of the last 5 seconds, is correct. I’ll say that with 99% certainty. I licensed the chain tension based power meter technology Polar used, and had discussions with them about that exact problem. I know 100% that the every-5th-sec recording protocol was used on the earlier S710 etc. watches, and I’m 99% certain that carried forward to the CS600 unit. Polar approached (approaches?) data recording from a heart rate-centric perspective, where such recording isn’t unreasonable (and from a bygone era when head unit memory was precious and limited). Of course, recording power numbers that way makes no sense.

I agree with Ray that the research is junk based on this problem. When independent blogs like this one, or crowd-based knowledge like the wattage list can understand and identify such problems better than academic peer-review, it reduces my confidence in such journals and their publishing processes.

Thanks Alan. We need some confirmation from Polar that’s indeed the case.

Actually, burden should be on the authors. Did they have confirmation on how the protocol works before they chose it?

Also, echoing what has been said ad nauseam: why not simply choose 1s intervals? The only reasonable explanation is that they didn’t know they could. If so, I hardly believe they investigated the protocol, since they didn’t even RTFM.

I’m also curious about the declaration of (conflict of) interest.

Ray – thanks for a great analysis.

Something I’ve often wondered about power meters is how to generate an ‘absolute’ reference point. Every test I’ve read depends on a human riding a bike as the ‘input’, which will always be flawed. Since we can’t yet order a pedaling robot that will generate perfectly linear (and calibrated) power – we may be stuck with smelly humans.

Is there any possibility of using a static weight to generate a load? Say, a 50 kg weight suspended on a pedal? I know power has a motion component (P = Fv), but some known reference point seems achievable. Maybe if you did a controlled rotation of the pedal/crank from TDC to BDC over a reference period, you would have an ‘absolute’ reference?

Static testing of power meters’ ability to accurately report applied torque has been used for decades, and has also been assessed in the scientific literature, with well over 150 SRMs tested with this method in one paper assessing its validity. There are also dynamic testing rigs available; e.g., the Australian Institute of Sport has such calibration rigs and has published research on power meter accuracy testing. Even so, the AIS, with a great rig, made some mistakes in their testing and analysis methodologies, so the present authors are not alone!

No testing rig provides a power input via a pedal interface in a pseudo-sinusoidal pulse like manner that a human does, let alone be able to do so with a high degree of accuracy.

Static testing is the most common and reliable means for users to validate that their power meter is at least correctly measuring torque when velocity is zero. If a meter can’t do that right, well, I’m not sure I’d believe it can do any better when moving. There are a number of ways to do this, but hanging precisely known masses from the pedal spindle of a forward-facing horizontal crank arm of known length, with the rear wheel of the bike held fixed, is pretty easy to do.
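A sketch of the arithmetic behind the static test described above, assuming the crank is exactly horizontal, the mass hangs from the pedal spindle, and the mass and crank length are known precisely (the 20 kg, 172.5 mm and 33.2 N·m values are hypothetical). It ignores the meter's own torque zero offset. Converting to power at a nominal cadence applies P = torque × angular velocity, the rotational form of P = Fv.

```python
import math

STANDARD_GRAVITY = 9.80665  # m/s^2


def static_torque(mass_kg, crank_length_m):
    """Expected torque (N·m) from a mass hung on the pedal spindle of a horizontal crank."""
    return mass_kg * STANDARD_GRAVITY * crank_length_m


def power_from_torque(torque_nm, cadence_rpm):
    """Power (W) = torque (N·m) x angular velocity (rad/s)."""
    return torque_nm * cadence_rpm * 2 * math.pi / 60


def percent_error(reported, expected):
    """Signed error of a meter's reading relative to the expected value, in %."""
    return 100 * (reported - expected) / expected


# Hypothetical test: 20 kg hung from a 172.5 mm crank.
expected_nm = static_torque(20.0, 0.1725)
print(f"expected torque: {expected_nm:.2f} N·m")
print(f"same torque at 90 rpm: {power_from_torque(expected_nm, 90):.0f} W")
print(f"meter reads 33.2 N·m: {percent_error(33.2, expected_nm):+.1f}%")
```

Repeating this with several masses gives points from which a meter's slope can be checked.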

Rotational velocity is another factor, with electromechanical reed switch based meters having the most reliable method and we can reasonably presume they measure cadence accurately (although they really should report it with higher precision than they presently do – i.e. 3 significant figures). Meters that use accelerometers to measure rotational velocity still face some challenges to provide accurate cadence data, but also have unrealised potential to provide more frequent crank velocity data.

One of the challenges to testing is dealing with the various locations where the forces and velocities are being measured, and how this can be compared with a standard, as well as distinguishing between performance in the lab on an ergo and out on the road with its greater natural variability.

E.g., say Brim Bros actually bring out their cleat-based power meter. What test rig could one possibly use to provide a standard power input to compare it with? Hence we are left with making comparisons with other power meters.

IMO, testing alongside a well calibrated and verified SRM *Science* model is your best bet if the power meter being compared is itself not a crank spider based model. I agree with Ray that the authors really should have verified this SRM’s slope calibration, and have examined and reported on its torque zero behaviour.

Hi Ray,

I agree with many above that you should submit a response to this article. It is one thing for all of us to complain here about the study, and another to actually put your very reasonable and scientific arguments in front of the rest of the readers and the editorial board of the journal to read and appreciate.

I review for multiple medical journals (though more frequently one). I’d hate to miss such errors in a medical study.

If you need help putting something together, I will be happy to help.

Thanks for the passion on the topic Ray, something we both share!

Reading the comments, it seems there’s a little confusion between the torque sampling rate and the rate at which power data is recorded.

I also see some are struggling with the difference between a longer period for the sample average (which is sort of OK) and down-sampling of the data (which is not). The latter’s only reason for existence is head units with limited memory capacity. The old Powertap Cervo (little yellow computer) also had this rarely used option, although it had better memory capacity than the Polar CS unit or the old Polar watches.

In this day and age if a power meter head unit has such limited memory capacity that to record more than a few hours of power meter data it needs to down-sample, then IMO it’s not fit for the purpose of most users.

The sampling and recording rates are not the only important distinctions either, as no crank/pedal-based power meter actually calculates power based on a fixed time sample; rather, power is calculated on a variable-duration event basis, that being the time taken to complete a full revolution of the crank. The power meters and/or head units (depending on the model of meter) then take this variable-duration power data and “force” it into a fixed-duration recording rate.

For the SRM, it will average the power value for the most recent number of completed crank cycles that concluded during that time sample period.

So if you are recording power at a 1 second rate (1Hz), and pedalling at around 90rpm say, then each 1 second sample may contain power data averaged from the most recent 1 or 2 crank revolutions and not the last full second at all. If you are recording power at 0.5 seconds (2Hz), then clearly not every time sample will contain a new completed crank revolution, so if a new crank revolution event hasn’t occurred since the last one, then the previous power value is repeated for that 0.5 second data point.
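To make that concrete, here is a small Python model of the behavior described above: each fixed-rate sample averages the revolutions that finished during that period, and repeats the previous value when none did. It illustrates the description in this comment, not SRM's actual firmware; the boundary convention (a revolution ending exactly on a sample boundary counts toward the earlier sample), the helper name `record_fixed_rate`, and the revolution times are assumptions.

```python
def record_fixed_rate(rev_end_times, rev_powers, interval_s, duration_s):
    """Map per-revolution power onto fixed-duration recording samples.

    Each sample averages the power of revolutions that finished within its
    period (start, end]; if none finished, the previous value is repeated.
    """
    samples = []
    last = 0.0  # nothing recorded yet before the first completed revolution
    for k in range(int(duration_s / interval_s)):
        start, end = k * interval_s, (k + 1) * interval_s
        finished = [p for t, p in zip(rev_end_times, rev_powers) if start < t <= end]
        if finished:
            last = sum(finished) / len(finished)
        samples.append(last)
    return samples


# Hypothetical revolutions at roughly 90 rpm (one every ~0.67 s).
rev_end_times = [0.6, 1.3, 2.0, 2.6]
rev_powers = [300, 320, 310, 330]

print(record_fixed_rate(rev_end_times, rev_powers, 1.0, 3.0))  # [300.0, 315.0, 330.0]
print(record_fixed_rate(rev_end_times, rev_powers, 0.5, 3.0))  # [0.0, 300.0, 320.0, 310.0, 310.0, 330.0]
```

At 1 Hz the middle sample averages two revolutions; at 2 Hz the value 310.0 repeats because no revolution finished in that half-second.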

I don’t know how other crank/pedal-based power meters perform that vital step of taking variable-duration power data and generating a data stream at 1Hz (or any other chosen recording rate). This doesn’t apply, of course, to Powertaps, which do actually average hub torque data samples over a fixed duration. That, as we know, creates other issues, most notably introducing an artificial variability in power data due to aliasing.

I might do an item at some stage as well to try and clarify this.

SRM power meters, as you point out, sample torque at 200Hz (not 500Hz as mentioned in the paper), and if using a Powercontrol IV (not many of those left in use!) then you have the option to record power data streamed at 10Hz. Now, what happens with 10Hz recording is that it will repeat power values a lot (as explained above, it updates only when a crank revolution has been completed), but it will pick up much earlier when the crank rotation event occurs, and so in that manner it does provide more accurate short-duration power data (e.g. for acceleration scenarios).

From SRM Powercontrol V and later, the power data maximum recording rate became 2Hz.

The Torque Analysis system can only provide the raw crank torque data at 200Hz, but not power data at 200Hz.

Ray, speaking of SRM, when can we expect a review of the SRM Electronic Indoortrainer (link to srm.de) from you? Living in France, it would be much easier for you to get hold of than in the US :-). Maybe it could also be used as a reference.

Wow, that’s just sad. So many errors, complete lack of logic and what hardly qualifies for analysis. I know this kind of thing happens all the time in published studies of every discipline, but it amazes me that people can be that stupid and sloppy. I’m not even a scientist and the ideas of comparing to a reference point, and aligning sample rates when comparing them, are obvious to me. And I bet the people involved are going to go on publishing (or positively reviewing) sloppy studies in the future because they don’t want to pay attention to the details.

Well, thanks for pointing these things out…it does a lot of damage when people read and trust these studies.

Anybody who read the study,

Was there an analysis of errors discussed in the paper at all? Did they even try to account for the differences or just leave it at “The power data from the Keo power pedals should be treated with some caution. . . ” ?

ST

Here:

Discussion

The aim of the current study was to assess the validity and test–retest reliability of the Keo power-pedal system during 2 types of commonly used laboratory exercise protocols. The results of this study indicate that the Keo power-pedal system provides reasonably valid data for the measurement of power output across a wide range of intensities typically used by recreational and competitive cyclists (~75–1100 W). The validity and reliability of the pedal system were comprehensively assessed using a variety of recommended statistical procedures.12,16 This is the first study to evaluate this new power-meter system when compared with the recognized and extensively used SRM device1,17–19 for the measurement of power output during laboratory-based cycling tasks. Concurrent validity assessment of the pedal system showed that the power data agreed well with those measured by the SRM, providing small TE, but the Keo system should be treated with some caution given the presence of some statistical differences between the device and the SRM. These statistical differences in mean power values were observed between power meters during the sprint (378.6 ± 283.8 W and 395 ± 285.1 W) and combined protocols (263.0 ± 216.1 W and 272.7 ± 218.7 W) for the Keo and SRM. The mean differences of 16.7 W (4.3%) and 9.7 W (3.2%) may be meaningful when comparing data from the Keo pedal system with those obtained using an SRM ergometer. Given the target population for this device, we used a relatively heterogeneous group of participants, and in this group such differences between power meters may actually be less important than for an elite rider who is likely to produce less variable power data. Despite the differences, the agreement analysis does suggests that the power data measured by the pedal system is generally reflective of the SRM, which is regarded as a “gold standard” for power measurement during cycling.8,18

The reliability of the pedals was, however, more equivocal. Although the TE statistics support the reliability of the pedal devices, the mean differences between T1 and T2 and poorer agreement in both the incremental and combined protocols (21 and 18.6 W, respectively) are important. This is of particular interest given that the corresponding T1 and T2 differences for the SRM were just 1.3 and 0.6 W, respectively. This may therefore represent physiologically meaningful differences, especially if they were observed during the assessment of a rider’s performance. While the power-pedal system may have practical potential as a tool for use by recreational riders wishing to increase the formal monitoring of cycling training, for researchers, coaches, or athletes requiring precise power data, its use may be somewhat limited. Caution should therefore be exercised if this system is being used for test–retest study designs.

It is important to acknowledge that this study used a relatively small participant sample size but that the study design provided a large number of measurement points over a variety of exercise intensities and modes of cycling. This collectively allowed assessment of the power-output data at magnitudes that are typically generated by the majority of athletes, especially those who are most likely to use this system (peak power ranges identified by the SRM were 686 to 1147 W and 200 to 350 W for the sprint and incremental protocols, respectively). These measured power data closely resemble the power-output data previously used in the evaluation of power-measurement equipment in cycling,4,17,18,20 particularly in the assessment of validity and reliability in a range of other power-measurement devices.7,21 However, the reported power data for the repeated sprints in the intermittent protocol have a smaller magnitude than those previously reported in cyclists,22 as well as active males.23 This was due to the low sampling frequency and data averaging of the cycling computer that accompanies the Keo pedal system. This was not sensitive enough to allow accurate determinations of sprint peak power. The differences in sampling rate between the power meters may have implications for the interpretation of these data, particularly at high power outputs, when peak power may instantaneously be large, and detectable on the SRM but not via the pedal system. This is unfortunate, since peak power during repeated-sprint cycling has recently been shown to be the most reliable measure of cycling sprint performance.24 Despite this, our measurement of mean power for the first 5 seconds of each sprint still provided only small TE for the reliability of the pedal system, with no significant between-trials differences. In addition, our use of heterogeneous participants who consequently achieved a wide variety of peak-power measurements in the incremental protocol limited the way that these data could practically be analyzed. We chose to standardize the number of measurements as a proportion of peak power, to simplify the analysis, but this may have increased the error we observed. A further limitation of the current study was that no familiarization trials were performed. It is, however, unlikely that there was a learning effect across the 2 trials, since the power output is determined by the ergometer in the incremental protocol. During the repeated-sprint protocols, there were no significant differences in the mean power for each sprint, and there was only a very small difference in the T1 and T2 data recorded by the SRM. Furthermore, we ensured that all participants had extensive prior experience with this type of exercise protocol.

Power-output data obtained from ergometry, or laboratory exercise simulations, present a simple method to allow the quantification of training load. However, in reality, as a result of the highly variable and dynamic alterations in power during riding on a road, power data present a more complex situation for the measurement of load or training impulse.1 In spite of this, power-output measurements are extensively used during training and racing situations, and these pedals provide a more cost-effective method for those wishing to quantify power. The slightly poorer reliability of the Keo system, combined with poorer sampling frequency, adds further evidence to the suggestion that this power-measurement device is not entirely suitable for those needing precise power data, especially where the detection of potentially small test–retest differences may be necessary. Conversely, the evaluation of power data from racing and training is often organized into time spent in “intensity bins” for postride evaluation.1 Given the relatively short and wide variety of activities included in the current study, compared with those that might be undertaken on the road, the reliability of the Keo power pedals during constant-rate, longer-duration efforts such as time trials remains unclear. In spite of this and the findings of the current study, the novelty of the Keo system may well still make it an attractive choice for those wishing to increase the information available to them during training and competition. Future studies should consider evaluating this system during in-field measurements against an SRM crank set. This is of particular importance given that terrain changes may influence power measurements depending on the location of measurement.8 During climbing, when torque is high and postural sway is present, power measurement at the pedal axle may also be different compared with a crank-set power meter, but this is at present unclear.

Thanks jm1

Ray you got it right.

Great title and a perfect (and not in a good way) case study for critical reading and thinking. Whoever edited that abstract also shares in the culpability here.

ST

awesome post – please consider any supportive Amazon or Clever Training purchases on my behalf contributing to your ‘buying scientific literature’ fund.

Interesting. A minor personal question: you wrote “or don’t let a Quarq settle a few rides and re-tighten a bit”. Re-tighten what? The chainring bolts?

Yes, correct, the chainring bolts. Generally after the first 2-3 rides this is best with the Quarq units. Vector effectively has something similar with doing a ‘manual calibration’ option after 3-4 hard sprints.

Good article on how a Quarq was drifting from a PT and how the author got them to track better via the Quarq chainring bolt re-torque procedure:

link to slowroadie.com

Doing a before/after static calibration test will tell if the re-torqueing made any difference in your set-up.

Thank you for this post.

Next time, you should ask publicly on Twitter if someone could give you a copy of a scientific article. I think you would get it for free in no time. No?

And happy New Year!

Excellent work! And excellent comments from Alex Simmons on the issue with cadence sampling: average power is not average torque times average cadence, so your time resolution on power is only as good as the time resolution of the slower value, in this case cadence.
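A two-sample example shows why: averaging torque and cadence separately and multiplying does not give average power when the two vary together. This is a minimal sketch; the torque and cadence values and the helper `rpm_to_rad_s` are hypothetical.

```python
import math


def rpm_to_rad_s(rpm):
    """Convert cadence in rpm to angular velocity in rad/s."""
    return rpm * 2 * math.pi / 60


torques_nm = [50.0, 20.0]     # hypothetical crank torque for two samples
cadences_rpm = [60.0, 120.0]  # hypothetical cadence for the same samples

# Correct: compute power per sample, then average.
powers_w = [t * rpm_to_rad_s(c) for t, c in zip(torques_nm, cadences_rpm)]
mean_power = sum(powers_w) / len(powers_w)

# Incorrect: multiply the averages.
mean_torque = sum(torques_nm) / len(torques_nm)
mean_cadence = sum(cadences_rpm) / len(cadences_rpm)
product_of_means = mean_torque * rpm_to_rad_s(mean_cadence)

print(round(mean_power, 1), round(product_of_means, 1))  # 282.7 329.9
```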

When resampling data (in this case downsampling) always use integrals when they are available and not rates. So resample work and distance and pedal revolutions and altitude and heart beats, not power and speed and cadence and VAM and HR. This is essentially the same as averaging power or speed or RPM or VAM or HR over the sampling interval. This is a really fundamental mistake made by Polar.
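Here is a minimal sketch of resampling through the integral, assuming a regularly sampled power trace: accumulate work (joules), keep every n-th cumulative value, and difference. Any trailing partial interval is dropped. The function name `downsample_via_work` and the sample values are hypothetical.

```python
from itertools import accumulate


def downsample_via_work(power_w, dt_s, factor):
    """Downsample power by resampling cumulative work, then differencing.

    Work (J) is accumulated per sample, every `factor`-th cumulative value is
    kept, and the work done in each new interval is divided by its duration.
    A trailing partial interval is dropped.
    """
    work_j = [0.0] + list(accumulate(p * dt_s for p in power_w))
    kept = work_j[::factor]
    new_dt = dt_s * factor
    return [(b - a) / new_dt for a, b in zip(kept, kept[1:])]


power_1hz = [100, 200, 300, 400, 500, 600]  # hypothetical 1 s samples (W)
print(downsample_via_work(power_1hz, 1.0, 3))  # [200.0, 500.0]
```

The result equals the mean of each 3-sample block, whereas keeping every 3rd power value would give 300 and 600.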

Ray, you wrote:

‘Graham – You’re making the same mistake they did. They said “which provided mean data for each 5 seconds of the protocols.” – but, it (the CS600x) fundamentally doesn’t do that. The product doesn’t work that way. It doesn’t average, it’s just a single point in time, every 5th second. Thus, no mean, no average, no median. Just one data point that’s not averaged over five seconds.’

So you are saying Polar works fundamentally differently than say SRM which per Alex “For the SRM, it will average the power value for the most recent number of completed crank cycles that concluded during that time sample period.”

My question to you again is this: where are you getting that technical detail on how the Polar power meter works internally, and what power value (point-in-time vs. average) is stored at each recording interval? It certainly is not in the manual as far as I can see. I am simply stating that your interpretation cannot be correct, since it would indicate totally invalid power data (i.e., garbage) for longer recording intervals like 15 secs or 60 secs. BTW, in your interpretation, what exactly is power “in a single point in time” anyway? How is it calculated?

Have you contacted Polar to get this sorted out? Hopefully they will provide the level of detail Alex did for SRM in his excellent post above. I continue on this topic out of curiosity to see how this actually works.

Your reviews are great.

Never mind. I went back and see Alan has provided an answer already.

I hope you qualify this with this narrow field. Anything outside of “gadgets” is probably a bit much.

I think critical reading is important, regardless of the topic. Many readers have e-mailed and commented that this is often the case in many journals out there. While there are a few that have high standards, some have much lower ones.

So we are filling the gap here :)

Let’s assume for a moment that Polar works as it should, i.e., it records power averages (vs. “point-in-time” values) for each recording interval.

SRM is set to sample at 2Hz and Polar records at 5secs.

Is it possible to have the comparison for 10secs sprint as accurate as if done with Polar set to 1sec recording interval? Yes it is if the comparison is done in the right way and Polar/SRM power files have accurate timestamps for samples.

1) Pick 2 consecutive 5-sec Polar power samples from the file during the sprint period (say, above 1000W) to mark a 10-sec segment for the comparison with the SRM.

2) Make sure the SRM samples just before and after that 10-sec segment have power values similar to the samples next to them (i.e., power does not change dramatically at the boundaries of the selected segment); otherwise, go back to 1) and pick another Polar segment.

3) Compare the avg power from the 2 Polar readings with that from the 20 SRM readings for that segment.

The accuracy of how well SRM aligns to the 10 secs segment picked from Polar readings depends on the sampling rate of SRM and not Polar. At worst, they would be 1/4sec misaligned. It would not matter if Polar was set to 1 sec recording.
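The three steps above can be sketched in Python under the commenter's own hypothetical (which Ray disputes earlier in the thread): that each Polar value is a true 5 s mean timestamped at the end of its interval. All data are synthetic, the 50 W boundary tolerance is an arbitrary choice, and the helper names are hypothetical.

```python
def segment_mean(timestamps, values, start, end):
    """Mean of the samples whose timestamps fall in (start, end]."""
    picked = [v for t, v in zip(timestamps, values) if start < t <= end]
    return sum(picked) / len(picked)


def boundary_is_stable(timestamps, values, t, tol_w):
    """Step 2: the samples either side of boundary time t differ by <= tol_w."""
    i = min(range(len(timestamps)), key=lambda k: abs(timestamps[k] - t))
    if i == len(values) - 1:
        return False  # no sample after the boundary to compare with
    return abs(values[i + 1] - values[i]) <= tol_w


# Hypothetical SRM trace at 2 Hz: 300 W, then a 1000 W effort from 2 s to 18 s.
srm_t = [0.5 * i for i in range(1, 41)]  # 0.5 s ... 20.0 s
srm_w = [1000.0 if 2 < t <= 18 else 300.0 for t in srm_t]

# Assumed: Polar stores a true 5 s mean, timestamped at the end of each interval.
polar_t = [5.0, 10.0, 15.0, 20.0]
polar_w = [segment_mean(srm_t, srm_w, t - 5, t) for t in polar_t]

# Step 1: two consecutive Polar samples (ending at 10 s and 15 s) mark (5, 15].
start, end = polar_t[0], polar_t[2]
# Step 2: check that power is not changing sharply at either boundary.
stable = all(boundary_is_stable(srm_t, srm_w, b, 50.0) for b in (start, end))
# Step 3: compare 2 Polar readings with the 20 SRM readings in the segment.
polar_avg = (polar_w[1] + polar_w[2]) / 2
srm_avg = segment_mean(srm_t, srm_w, start, end)
print(stable, polar_avg, srm_avg)  # True 1000.0 1000.0
```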

It would be foolish to pick an arbitrary 10 secs segment in time and then try to get the avg power from Polar for it since indeed it would not align that well due to 5sec recording interval and be not that accurate. Doing this way would match your analogy with the 100m dash on a track and taking photos 5sec apart. So yeah 1sec would be much better.

Now, I have not read the paper and have no idea how they actually picked the 10secs segment when they did the comparisons for sprints….

In conclusion, keeping Polar at a 5-sec recording interval does not necessarily invalidate their results. Still, selecting 1 sec was the obvious choice, and it is unclear why it was not used.

The problem is their mistaken assumption that Polar actually works correctly in how it records the power data for 5secs recording interval. They should have called the manufacturer and double check that ;)

This is why I like looking at maximal power curves: maximum power produced for each possible time interval. There’s then no time synchronization/alignment required. However, it does expose the comparison to a bias of more heavily weighting high power values than low values.
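A mean-max power curve can be computed separately from each device's file with a sliding window, so the two files never need to be time-aligned. This sketch assumes evenly spaced 1 s samples with no gaps; the function name `mean_max_curve` and the data are hypothetical.

```python
def mean_max_curve(power_1hz, durations_s):
    """Best average power over any window of each duration (1 s samples assumed)."""
    curve = {}
    for d in durations_s:
        if d > len(power_1hz):
            continue  # not enough data for this duration
        window = sum(power_1hz[:d])
        best = window
        for i in range(d, len(power_1hz)):
            window += power_1hz[i] - power_1hz[i - d]  # slide the window by one sample
            best = max(best, window)
        curve[d] = best / d
    return curve


ride = [200, 900, 1100, 1000, 300, 200]  # hypothetical 1 s samples (W)
print(mean_max_curve(ride, [1, 2, 5]))  # {1: 1100.0, 2: 1050.0, 5: 700.0}
```

Running the same function on each device's file and comparing the curves duration by duration gives the alignment-free comparison described above, with its bias toward the high-power values.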

Note that if cadence and power are both changing in a correlated way, it can be mathematically demonstrated that the SRM gives the wrong answer, by virtue of the way it assumes constant cadence over a full pedal stroke. The Polar is no better, but to assume they should be both wrong in the same way would be naive.

FYI, they used the SRM ergometer (not the SRM power meter):

link to srm.de

“For tests to measure maximum power and physical condition with varying cadence. The cyclist himself determines his power output, as the ErgoMeter keeps the cadence constant.”

“Each SRM ErgoMeter is tested, calibrated and certified for protocol measurements with data accuracy with less than 0.5% error.”

“Braking power from 20 up to 4,200 watts.”

I suspect this one point may need more clarification, Ray. It is not the typical SRM unit you may be familiar with, but rather a specialized piece of equipment designed for research labs, with very high precision and stated accuracy. As you may note, this is the issue for reviewers: like you, they may not notice every aspect that could lead to a different set of conclusions. This does not change the fact that the Look Keo is something you are familiar with, and that the researchers and reviewers may not have been as familiar as you with its proper use, setup, strengths and weaknesses, beyond the fact that they made a severe data collection error due to incorrect assumptions. Still, I feel that the journal and authors deserve the opportunity to defend themselves in an appropriate way.

When looking at accuracy in a lab setting – they should have verified it within the lab setting, not after it’s been shipped hundreds of miles and re-installed somewhere else.

As one of the peer reviewers did already, they are more than welcome to post any response here – that’s always welcomed!

My challenge with publishing a formal letter to this publication is that (aside from taking time I honestly don't have) I feel it would only really serve to increase the exposure of the publication, as well as of the culture of how some peer-reviewed publications work.

There are a number of really smart power meter people out there; surely, instead of keeping things internal to academia, they could have asked someone who knew what they were talking about with these devices. I've done exactly that before, helping other random university/academic projects with setup-type questions. I'm not saying I can help everyone, but there are definitely people out there willing to help, and finding those people isn't really that hard.

I'd suggest that you send the author an e-mail with a link to this discussion, and you could probably do the same for the journal. It might open a new discussion, since the journal would probably pass your analysis along to the reviewers. You have already spent a fair bit of time on this, and it would be worthwhile to give those who bear the brunt of the discussion a chance to defend their results. This too is part of the academic process that is supposed to occur via peer review.

What are the chances this thread hasn't been brought to the authors' attention? Probably pretty small. I suspect the only way they would respond is if they think Ray's arguments are without merit. And it would be really hard to argue that the 5-second recording wasn't simply ignorance of how the product works.

Very nice and thorough analysis!

In most technical journals, you can submit a rebuttal to someone’s analysis. This would be a good thing to consider in this case.

This absolutely needs to be emailed to the editor and followed-up on.

Fantastic review. I discovered your blog about a year ago and have enjoyed it since. As a professor of biomechanics, I am quite deeply immersed in the academic world. Over the years I have become increasingly disappointed with what gets published and how it happens. Your readers are absolutely right: critical reading is crucial. And you don't need a PhD to do it, as you have shown. As someone else mentioned, while the authors themselves assume primary responsibility, this paper would also have been reviewed by at least two academic "peers". I'm embarrassed. It certainly does not reflect well on my profession. Anyway, keep up YOUR great and critical work.


Hi there,

Thank you for this post. I just read it, seven years after you posted it…

Well, I am looking into the sampling of today's power meters, and wanted to say this:

The SRM I use samples at 200 Hz, and end-user models sample at around 50 Hz.

They do not let you get the raw data! They run their own algorithm to average over 1 second and send that value to the head unit; they never sample at 1 Hz. We pedal at 1-2 Hz, but we do not pedal at constant power or torque. For example, for a 500 W average, we get something like a sine function swinging from 100 W to 800 W. For a 1-2 Hz sinusoidal signal, I would use at least 200 Hz sampling. So the SRM is the only one I would use for research; for end users, there is just a model behind it, and it is for making money.
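The reasoning behind wanting a high sample rate is related to the Nyquist criterion: the sample rate has to be comfortably above the pedaling frequency, or the samples can systematically land on the same part of the stroke. A toy sketch, assuming a symmetric sinusoidal power ripple of 500 W ± 350 W at 1.5 Hz (a simplification, not the 100 to 800 W shape described above), averaged over 10 seconds and starting at an arbitrary phase:

```python
import math

def sampled_mean(duration_s, fs_hz, ripple_hz=1.5, mean_w=500.0, swing_w=350.0, phase=math.pi / 3):
    """Mean of a hypothetical sinusoidal power trace sampled at fs_hz for duration_s seconds."""
    n = round(duration_s * fs_hz)
    samples = [mean_w + swing_w * math.sin(2 * math.pi * ripple_hz * k / fs_hz + phase)
               for k in range(n)]
    return sum(samples) / n

print(sampled_mean(10, 200))  # ~500 W: many samples per pedal cycle average the ripple out
print(sampled_mean(10, 1.5))  # ~803 W: one sample per cycle always hits the same point of the stroke
```

Sampling at exactly the pedaling frequency is the worst case; both the 200 Hz and ~50 Hz rates mentioned above are far above it.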